My Research

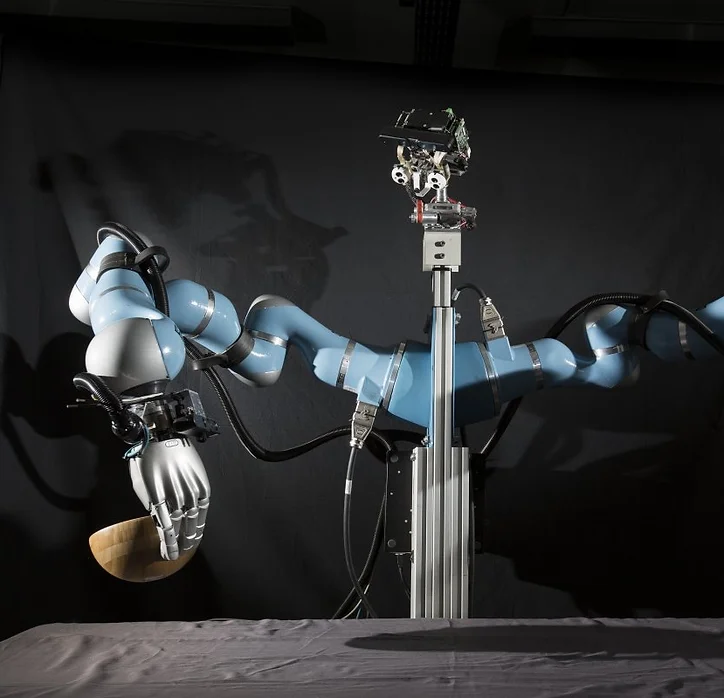

Robot Grasping

Picture this scenario: You’re standing in front of a fridge, reaching for an object that’s partially hidden from view. But here’s the interesting part – instead of solely relying on your vision to locate and grasp the object, you instinctively use your sense of touch. You explore with your fingertips, feeling around until you uncover its exact position, and without even a second thought, your hand swiftly moves in to seize it. Now, let’s bring this human-like capability to robots. Unlike most proposed approaches that rely on poking the object to find it, we take a different, more intelligent approach. Inspired by the inherent adaptability of human behavior, we design our robots to minimize uncertainty. They delicately approach the object, strategically planning their trajectory so that any contact generates valuable information about the object’s location. With minimal adjustments, the robot achieves a successful grasp. It’s this seamless blend of human-inspired techniques and cutting-edge technology that propels us forward in creating truly advanced and adaptable robotic systems.
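To make the idea of "contact that generates information" concrete, here is a minimal sketch and not our actual planner. It assumes a toy world in which the object sits in one of five cells, a made-up prior belief, and a hypothetical touch sensor that is right 95% of the time. It scores each cell by the uncertainty (entropy) expected to remain after touching it, then applies a Bayes update for the chosen cell.

```python
import math

def entropy(belief):
    """Shannon entropy (in nats) of a discrete belief over object positions."""
    return -sum(p * math.log(p) for p in belief if p > 0.0)

def contact_likelihood(object_cell, touched_cell, contact):
    """Hypothetical sensor model: touching the object's cell reports contact
    95% of the time; touching any other cell reports contact 5% of the time."""
    p_contact = 0.95 if touched_cell == object_cell else 0.05
    return p_contact if contact else 1.0 - p_contact

def update(belief, touched_cell, contact):
    """Bayes update of the belief after a contact / no-contact reading."""
    weighted = [p * contact_likelihood(cell, touched_cell, contact)
                for cell, p in enumerate(belief)]
    total = sum(weighted)
    return [w / total for w in weighted]

def expected_entropy(belief, touched_cell):
    """Posterior entropy averaged over both possible readings."""
    result = 0.0
    for contact in (True, False):
        p_reading = sum(p * contact_likelihood(cell, touched_cell, contact)
                        for cell, p in enumerate(belief))
        if p_reading > 0.0:
            result += p_reading * entropy(update(belief, touched_cell, contact))
    return result

def most_informative_cell(belief):
    """Pick the cell whose touch is expected to leave the least uncertainty."""
    return min(range(len(belief)), key=lambda c: expected_entropy(belief, c))

belief = [0.05, 0.10, 0.45, 0.30, 0.10]  # made-up prior over five cells
best = most_informative_cell(belief)
posterior = update(belief, best, contact=True)
```

In a real reach-to-grasp setting the belief would be over full object poses and the candidates would be whole trajectories, but the principle is the same: prefer motions whose contacts are expected to shrink the uncertainty the most.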

Robot Planning in Complex Spaces

Are you ready for an exciting development in control problems? Meet belief space planning, an alternative for partially observable control problems in which the robot plans over its belief, a probability distribution over the possible states, rather than over a single assumed state. In recent years, its application to robot manipulation has gained significant momentum, and we’re taking it even further. While others have explored this approach only in simplified control problems, we dive headfirst into planning dexterous reach-to-grasp trajectories under object pose uncertainty. Join us as we unlock the potential of belief space planning and change the way robots act in complex and dynamic environments.

Motion Prediction

Imagine a future where robots can anticipate how objects will respond to their touch. This capability is essential for effective manipulation. Our approach offers a novel way to make robust predictions on previously unseen objects. By conditioning predictions on local surface features, we make it possible to generalize across objects with diverse shapes. Get ready to witness a leap forward in the realm of robotic predictions, as we pave the way for more intelligent, adaptable, and perceptive machines.

Active Exploration

Information on object shape is fundamental to successful robot-object interaction. However, incomplete perception and noisy measurements make it hard to obtain accurate models of novel objects. Especially when vision and touch are to be used together for learning object models, a representation that can incorporate prior shape knowledge and heterogeneous, uncertain sensor feedback is paramount to fusing them coherently. Moreover, embedding a notion of uncertainty in the shape representation makes it possible to bias the active search for new tactile cues more effectively.
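As a rough illustration of how shape uncertainty can steer tactile exploration, the sketch below uses a Gaussian process, which is one common choice and an assumption here, since the text above does not name a specific representation. The surface is reduced to a 1-D profile, the kernel and its length scale are made up, and the robot simply touches next wherever the posterior variance is largest.

```python
import math

def rbf(a, b, length_scale=0.3):
    """Squared-exponential kernel between two 1-D surface locations."""
    return math.exp(-0.5 * ((a - b) / length_scale) ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x

def posterior_variance(query, touched, noise=1e-4):
    """GP posterior variance at `query` given the locations already touched."""
    K = [[rbf(a, b) + (noise if i == j else 0.0)
          for j, b in enumerate(touched)]
         for i, a in enumerate(touched)]
    k_star = [rbf(query, t) for t in touched]
    alpha = solve(K, k_star)
    return rbf(query, query) - sum(k * a for k, a in zip(k_star, alpha))

def next_touch(candidates, touched):
    """Choose the candidate location where the shape is least certain."""
    return max(candidates, key=lambda q: posterior_variance(q, touched))

touched = [0.0, 0.2, 1.0]                    # hypothetical past contacts
candidates = [i / 10 for i in range(11)]     # hypothetical reachable locations
target = next_touch(candidates, touched)
```

The variance depends only on where the robot has already touched, not on the measured values, so the next touch lands in the largest unexplored gap. A visual prior could be folded in by adding points seen by the camera to `touched` with a larger noise term.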

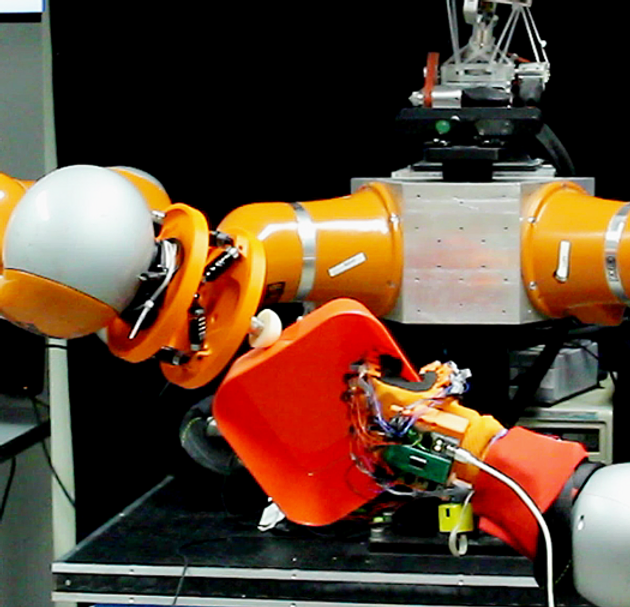

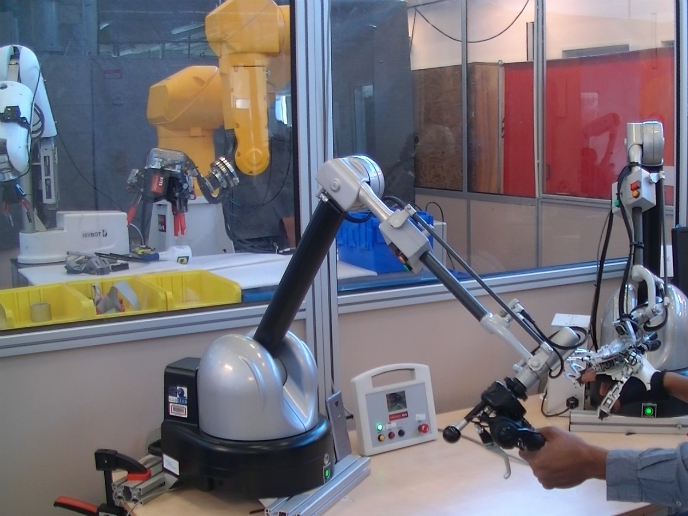

physical Human-Robot Interaction (pHRI)

Designing robotic assistance devices for manipulation tasks can be a real puzzle to solve. But fear not, because this research is all about taking it to the next level! We’re focused on making semi-autonomous robots – you know, the ones that can be controlled by humans – even better. Our big idea is to create a system that’s super smart and aware of its surroundings and the user’s needs. With this knowledge, it’ll be able to make the best decisions on how to assist the user. Exciting, right?

Intelligent Assistant for Wearable Robotics

Imagine a world where wearable robots become your trusty sidekicks, helping you with every move you make. Well, that’s exactly what we’re working on! We’re diving deep into the realm of intelligent, semi-autonomous robotic systems to support patients in using their upper-limb prosthetics.

Here’s the thing: there are so many people out there, more than 1% of the population in the UK, who have undergone upper limb amputations. But you know what? Over 55% of them have decided to ditch their prosthetics because the devices just didn’t meet their expectations. It’s a tough pill to swallow when the psychological and physical toll is overwhelming, while the functionality falls short.

But fear not! We have a game-changing plan. We’re creating a collaborative system where the patient and the prosthetics work together in perfect harmony. It’s like having a robotic buddy that understands your every need. By infusing prosthetics with the power of autonomous intelligence, we’re revolutionizing the field and making dexterous prosthetic devices more appealing than ever before. Get ready for a future where man and machine come together as one!

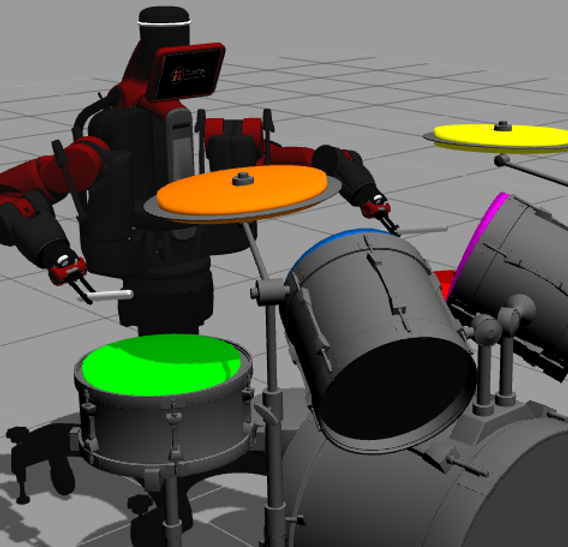

Multisensory Integration for Sensory Prediction

Picture this: our incredible sensorimotor system is like a translator that turns sensory information into precise motor commands. It’s the reason we can effortlessly navigate the world around us and interact with our environment. Think about it: when you see a car approaching, you instinctively know to move out of the way because your visual and auditory senses have teamed up to alert you.

But here’s where things get really cool. Our sensorimotor system is so smart that it can handle missing or corrupted data like a champ. Ever had that moment when you somehow sense there’s a car behind you, even without actually seeing it? That’s all thanks to the remarkable resilience of our system.

But wait, there’s more! Our internal model kicks things up a notch by making predictions about what sensory inputs are coming our way. This superpower allows us to anticipate and adapt to unexpected situations. It’s like having a crystal ball that helps us stay one step ahead.

Now, let’s talk robots. If we want a robot to rock the drums like a pro, it needs its very own sensorimotor system. It’s the key to translating signals, predicting what’s coming next, and ensuring our robotic friend can adapt in real-time. So, if you want a drumming robot that can groove to the beat, you better believe it needs a top-notch sensorimotor system.

AI Drones and Aerial Transportation

Prepare to be blown away because this paper takes aerial manipulation to new heights! We’re diving into uncharted territory by exploring the fascinating world of learning transferable contact models for aerial tasks. Imagine unmanned aerial vehicles equipped with cable-suspended grippers that are capable of autonomously computing attach points on never-before-seen payloads for mid-air transportation. It’s truly groundbreaking stuff!

Here’s the exciting part: to our knowledge, no one has tackled the challenge of autonomously generating contact points for aerial tasks quite like this before. Our approach revolves around learning a probability density of contacts from just a single demonstration. No fancy handcrafting or ad-hoc heuristics needed. We let the data speak for itself. We then take things one step further by adapting this approach specifically for encoding aerial transportation tasks. Say goodbye to manual feature engineering and hello to a streamlined one-shot learning paradigm!

Our models are designed to be rock solid, relying solely on the geometric properties of the payloads computed from a point cloud. They can handle partial views, which makes them highly versatile. We put our approach to the test in a simulated environment, tasking either one or three quadcopters with transporting never-before-seen payloads along a designated trajectory. Our method computes contact points and quadcopter configurations on the fly for each test. For comparison, we also included a baseline method based on a modified grasp learning algorithm from the existing literature.
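To give a flavour of what "learning a probability density of contacts" can look like, here is a minimal sketch under loud assumptions: each surface point is described by a made-up two-number geometric feature (say, curvature and the vertical component of the normal), the density is a plain Gaussian kernel density estimate, and all names and numbers are hypothetical. It is not the model from the paper.

```python
import math

def gaussian_kernel(x, y, bandwidth):
    """Gaussian kernel between two feature vectors of equal length."""
    d2 = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-0.5 * d2 / bandwidth ** 2)

def contact_density(feature, demo_features, bandwidth=0.1):
    """Unnormalised kernel density of `feature` under the features seen
    at the demonstrated contacts (the constant does not affect ranking)."""
    total = sum(gaussian_kernel(feature, d, bandwidth) for d in demo_features)
    return total / len(demo_features)

def best_attach_point(candidates, demo_features):
    """Return the id of the candidate point that best matches the demo."""
    return max(candidates,
               key=lambda c: contact_density(c[1], demo_features))[0]

# Hypothetical features at the contacts of one demonstration.
demo_features = [(0.80, 0.10), (0.75, 0.15), (0.85, 0.05)]

# Hypothetical (point id, feature) pairs from a new payload's point cloud.
new_payload = [
    ("p0", (0.10, 0.90)),
    ("p1", (0.78, 0.12)),
    ("p2", (0.40, 0.50)),
]

chosen = best_attach_point(new_payload, demo_features)
```

Because the density is defined over local geometry rather than over a specific object, the same demonstration can rank points on a payload it has never seen, and points missing from a partial view are simply not among the candidates.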

The results are exciting! Our approach outperforms the baseline, giving far better controllability of the payload during transportation tasks. To wrap up, we discuss the strengths and limitations of our idea and outline future research directions that will continue to push the boundaries of what’s possible in aerial manipulation. Get ready to witness the future of high-flying robotics!

Contact

Assistant Professor in Computer Science

AI + Robotics Specialist